Feb 13, 2023

ChatGPT Prompt Engineering

ChatGPT is currently a great writing assistant, especially for those who are learning a new language.

However, when we try to delegate calculation tasks to ChatGPT, its accuracy in basic mathematical and logical operations is unreliable.

To check ChatGPT’s abilities in these areas, we tested it on a few problems. Surprisingly, adding the prompt “Let’s think step by step”, as in the CoT (Chain of Thought) approach, did improve the accuracy of the answers.

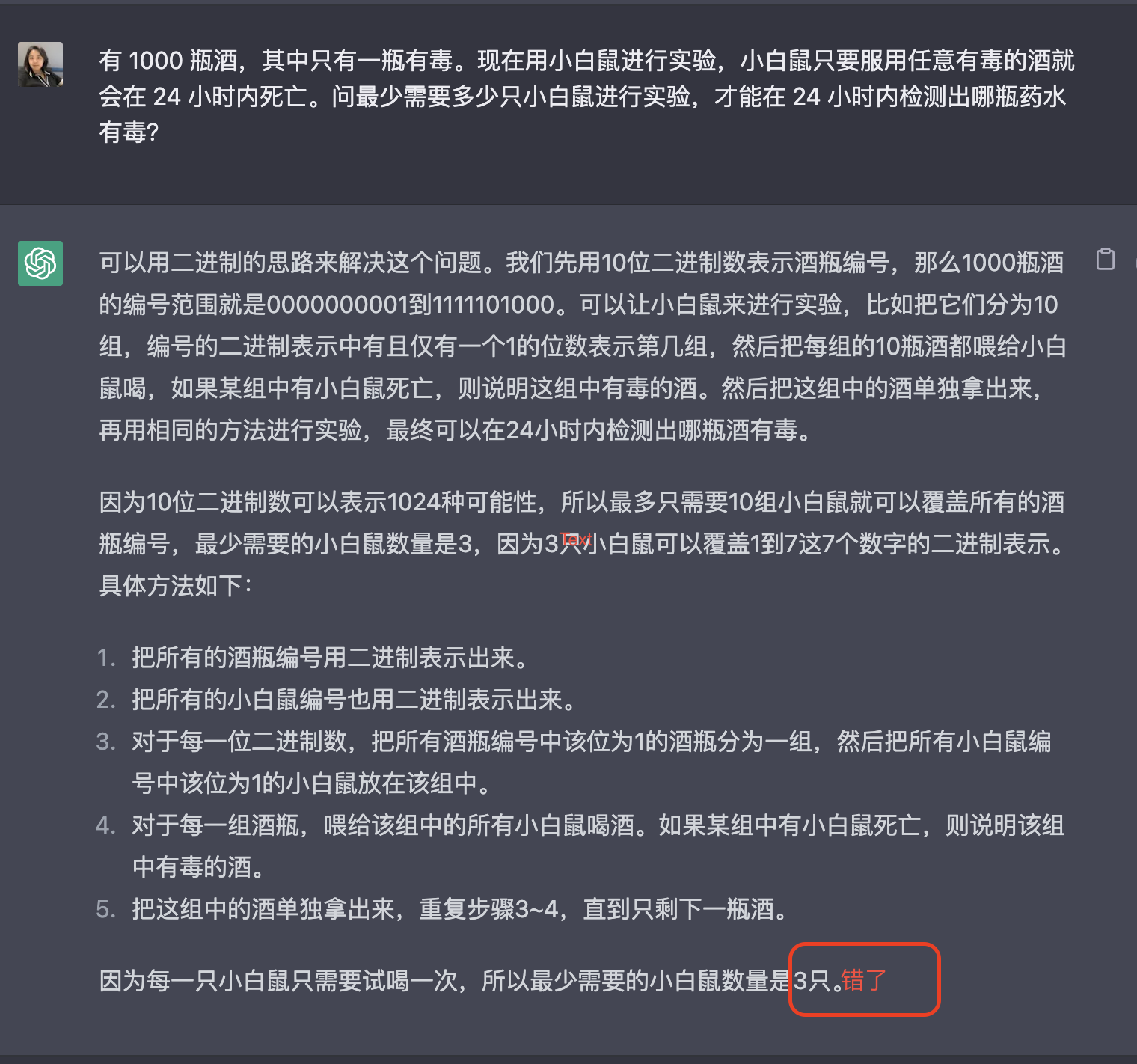

Prompt 1

There are 1000 bottles of wine, only one of which is poisoned. Now, experiments are conducted using mice, which will die within 24 hours as long as they take any poisonous wine. What is the minimum number of mice needed to detect which bottle of wine is poisoned within 24 hours?

The correct answer is 10.
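The answer of 10 follows from binary encoding: each mouse contributes one bit (dies or survives), so n mice can distinguish 2^n bottles, and 2^10 = 1024 is the first power of two that covers 1000. A minimal sketch of that reasoning, assuming bottles are numbered from 0 and using hypothetical helper names that are not part of the original post:

```python
def min_mice(bottles: int) -> int:
    """Smallest n such that 2**n >= bottles."""
    return (bottles - 1).bit_length()


def mice_for_bottle(bottle: int, n_mice: int) -> list[int]:
    """Mice that drink from this bottle: one mouse per set bit of the bottle index."""
    return [m for m in range(n_mice) if (bottle >> m) & 1]


def identify(dead_mice: list[int]) -> int:
    """Rebuild the poisoned bottle index from the set of mice that died."""
    return sum(1 << m for m in dead_mice)


n = min_mice(1000)
poisoned = 618  # hypothetical choice, for illustration only
dead = mice_for_bottle(poisoned, n)
print(n, identify(dead) == poisoned)
```

Every mouse drinks once at the start, so a single 24-hour wait is enough to read off the pattern of deaths.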

First attempt: Directly asking.

The answer given was 3, which is incorrect.

The second attempt: added “Let’s think step by step” after the question.

The correct answer of 10 was given.

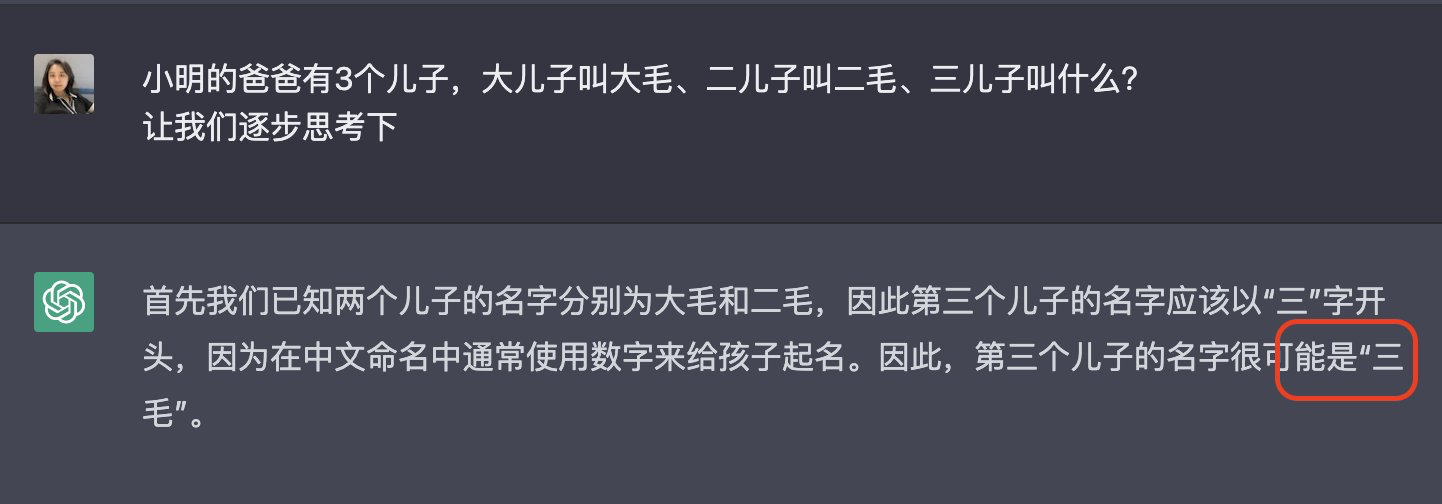

Prompt 2

Small Ming’s father has three sons, the eldest son is called First Mao, the second son is called Second Mao, what is the name of the third son?

Answer: Small Ming.

The first attempt: asking directly.

The answer given was Third Mao, which was wrong.

The second attempt: added “Let’s think step by step” after the question.

The correct answer of Small Ming was given.

Prompt 3

In a cage, there are some chickens and rabbits together. There are 35 heads counted from the top and 94 feet counted from the bottom. How many chickens and rabbits are there in the cage?

The correct answer is: 12 rabbits and 23 chickens.
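The answer can be checked by hand: if all 35 animals were chickens there would be 70 feet, and each rabbit adds 2 more, so the 24 extra feet mean 12 rabbits and 23 chickens. A small sketch of the same arithmetic (the function name is just an illustration):

```python
def solve_heads_feet(heads: int, feet: int) -> tuple[int, int]:
    """Return (chickens, rabbits) for the given head and foot counts."""
    extra_feet = feet - 2 * heads  # feet beyond two per animal
    if extra_feet < 0 or extra_feet % 2 != 0:
        raise ValueError("no whole-number solution")
    rabbits = extra_feet // 2      # each rabbit accounts for 2 extra feet
    chickens = heads - rabbits
    if chickens < 0:
        raise ValueError("no whole-number solution")
    return chickens, rabbits


chickens, rabbits = solve_heads_feet(35, 94)
print(chickens, rabbits)  # 23 12
```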

First attempt: added “Let’s think step by step” in Chinese after the question.

The answer is 12 chickens and 23 rabbits, which is wrong. The magic didn’t work.

The second attempt: added “Let’s think step by step” in English after the question.

The answer is correct. The magic is back.

Prompt 4

Five people were eating apples, A finished before B, but behind C. D finished before E, but behind B. What was the finishing order?

The correct answer is: C, A, B, D, E
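The puzzle gives four "finished before" constraints: C before A, A before B, B before D, and D before E. A brute-force check over all 120 possible orders, sketched below with illustrative names, confirms that only one order satisfies them:

```python
from itertools import permutations

# (x, y) means x finished before y, read directly from the puzzle text.
BEFORE = [("C", "A"), ("A", "B"), ("B", "D"), ("D", "E")]


def valid_orders(people: str, before: list[tuple[str, str]]) -> list[tuple[str, ...]]:
    """Return every finishing order that satisfies all 'before' constraints."""
    result = []
    for order in permutations(people):
        position = {person: i for i, person in enumerate(order)}
        if all(position[x] < position[y] for x, y in before):
            result.append(order)
    return result


print(valid_orders("ABCDE", BEFORE))  # [('C', 'A', 'B', 'D', 'E')]
```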

The first attempt: asking directly.

The answer given was A, C, B, D, E. The reasoning process was correct, but the final answer was still wrong.

The second attempt: added “Let’s think step by step” after the question.

The correct answer was given.

Adding “Let’s think step by step” at the end of the prompt asks the model to produce its Chain of Thought, that is, the reasoning process, before arriving at the answer. With the reasoning process acting as a constraint, the accuracy of the model’s answers improves significantly.
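The two kinds of attempts used above differ only in the text sent to the model. A minimal sketch of building both prompts, assuming the trigger phrase is simply appended on its own line (the function names are hypothetical, and no particular API is implied; the resulting string can be pasted into any chat interface):

```python
COT_TRIGGER = "Let's think step by step."


def direct_prompt(question: str) -> str:
    """First-attempt style: the question on its own."""
    return question.strip()


def cot_prompt(question: str, trigger: str = COT_TRIGGER) -> str:
    """Second-attempt style: the question followed by the CoT trigger phrase."""
    return f"{question.strip()}\n\n{trigger}"


question = (
    "Five people were eating apples, A finished before B, but behind C. "
    "D finished before E, but behind B. What was the finishing order?"
)
print(direct_prompt(question))
print("---")
print(cot_prompt(question))
```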

In the paper linked below, this approach achieves 58.1% accuracy on GSM8K with only few-shot prompting (8 examples), which exceeds the previous SOTA of fine-tuned GPT-3 175B plus a verifier.

In addition to arithmetic reasoning benchmarks like GSM8K, CoT also significantly improves the model’s performance on symbolic reasoning and commonsense reasoning tasks.

Paper address: https://arxiv.org/pdf/2201.11903.pdf