Feb 14, 2023

MIT 14.01 Principles of Microeconomics

#Microeconomics# #2023 Reading#

YouTube: https://t.co/OR3UsByAfG

Syllabus: https://t.co/RS7hYdR3w1

L2 Preferences and Utility Functions

3 preference assumptions:

- Completeness. You have preferences over any set of goods you might choose from. You can’t say “I don’t care” or “I don’t know”, though you can be indifferent between two options, meaning you value them equally.

- Transitivity. If A > B and B > C, then A > C.

- Nonsatiation. More is always better: you always want more.
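
To make the first two assumptions concrete, here is a minimal sketch that checks them on a small set of options. Everything in it is hypothetical and not from the lecture: the options `A`, `B`, `C`, the helper names, and the two example preference relations. A relation here is a function `weakly_prefers(a, b)` meaning "a is at least as good as b".

```python
from itertools import product

def is_complete(items, weakly_prefers):
    """Completeness: any two options can be compared in at least one direction."""
    return all(
        weakly_prefers(a, b) or weakly_prefers(b, a)
        for a, b in product(items, repeat=2)
    )

def is_transitive(items, weakly_prefers):
    """Transitivity: a >= b and b >= c must imply a >= c."""
    return all(
        weakly_prefers(a, c)
        for a, b, c in product(items, repeat=3)
        if weakly_prefers(a, b) and weakly_prefers(b, c)
    )

items = ["A", "B", "C"]

# Hypothetical well-behaved preferences: a simple ranking A > B > C.
rank = {"A": 3, "B": 2, "C": 1}
def prefers_by_rank(a, b):
    return rank[a] >= rank[b]

# Hypothetical cyclic preferences: A > B, B > C, but C > A.
cycle = {("A", "B"), ("B", "C"), ("C", "A")}
def prefers_cyclic(a, b):
    return a == b or (a, b) in cycle

print(is_complete(items, prefers_by_rank), is_transitive(items, prefers_by_rank))
print(is_complete(items, prefers_cyclic), is_transitive(items, prefers_cyclic))
```

The cyclic relation can compare every pair, so it is complete, but it fails transitivity because A beats B and B beats C while C beats A.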

Indifference curves: graphical maps of preferences.
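
As a rough illustration of what an indifference curve contains, the sketch below lists bundles that give the same utility. The utility function `U(x, y) = sqrt(x * y)` and the function names are assumptions chosen for the example, not something these notes specify.

```python
import math

def utility(x, y):
    """Assumed utility function for two goods: U(x, y) = sqrt(x * y)."""
    return math.sqrt(x * y)

def indifference_curve(level, xs):
    """Bundles (x, y) with utility(x, y) == level, solving y = level**2 / x."""
    return [(x, level ** 2 / x) for x in xs]

# Three bundles on the curve for utility level 2.
points = indifference_curve(2.0, [1.0, 2.0, 4.0])
for x, y in points:
    print(f"x={x}, y={y}, U={utility(x, y)}")

# Nonsatiation under this utility: more of both goods means higher utility.
print(utility(3.0, 3.0) > utility(2.0, 2.0))
```

Every bundle on the same curve leaves the consumer equally well off, and bundles with more of both goods sit on a higher curve.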

L20 Uncertainty

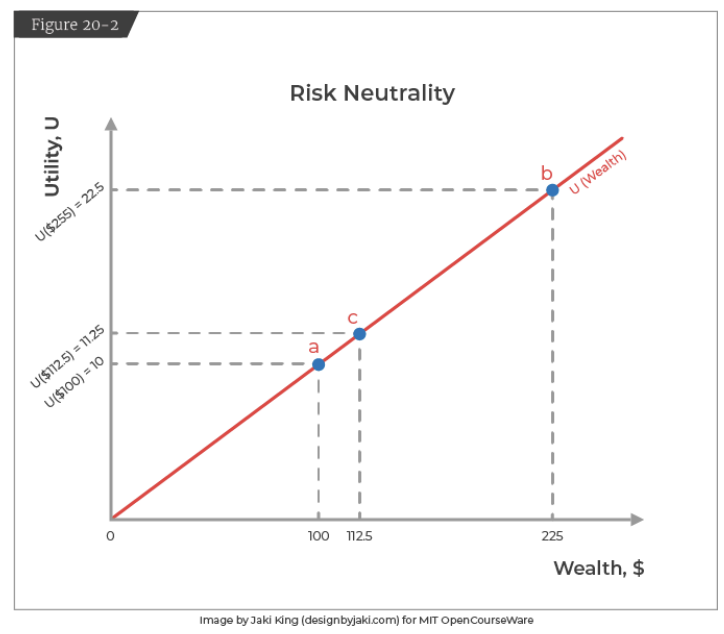

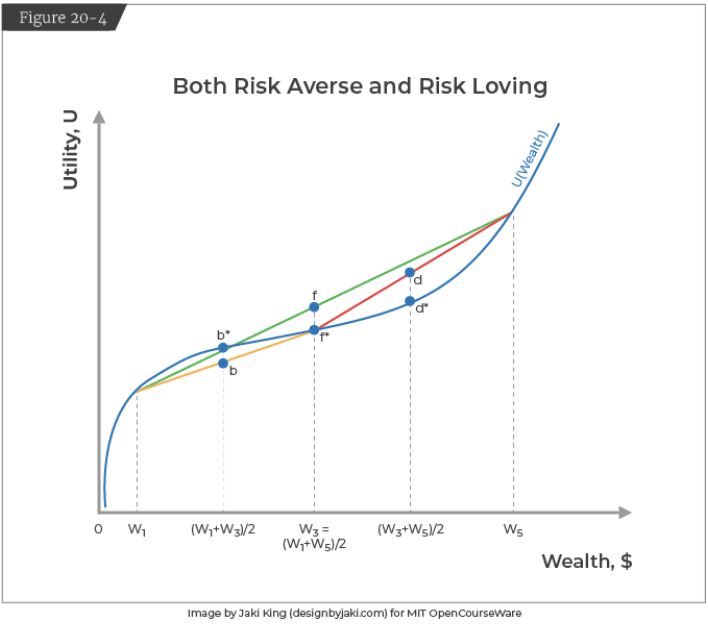

- Expected Value